Mental Health AI Chatbot Project 2025

Project Topic: Mental Health AI Chatbot Project

Research Mentor: Prof. Ganesh Mani (Carnegie Mellon University)

PROJECT BACKGROUND

In such a challenging society where mental health related issues are at an all-time high, I watched how stress can turn from a feeling into a lifestyle. Among adolescents, anxiety burnout is so common or rather inevitable—yet access to real mental-health care was hard. Whether it’s cost, stigma, lack of accessibility or other social factors, I firmly believe that mental health should be accessible for everyone. Ever since the rise of AI chatbots and the use of AI in general and how powerful it can be at imitating humans, I began with a focused question: Can accessible AI chatbots credibly support the mental health and strain for teens who face cultural stigma, social barriers, or financial limits to traditional care?

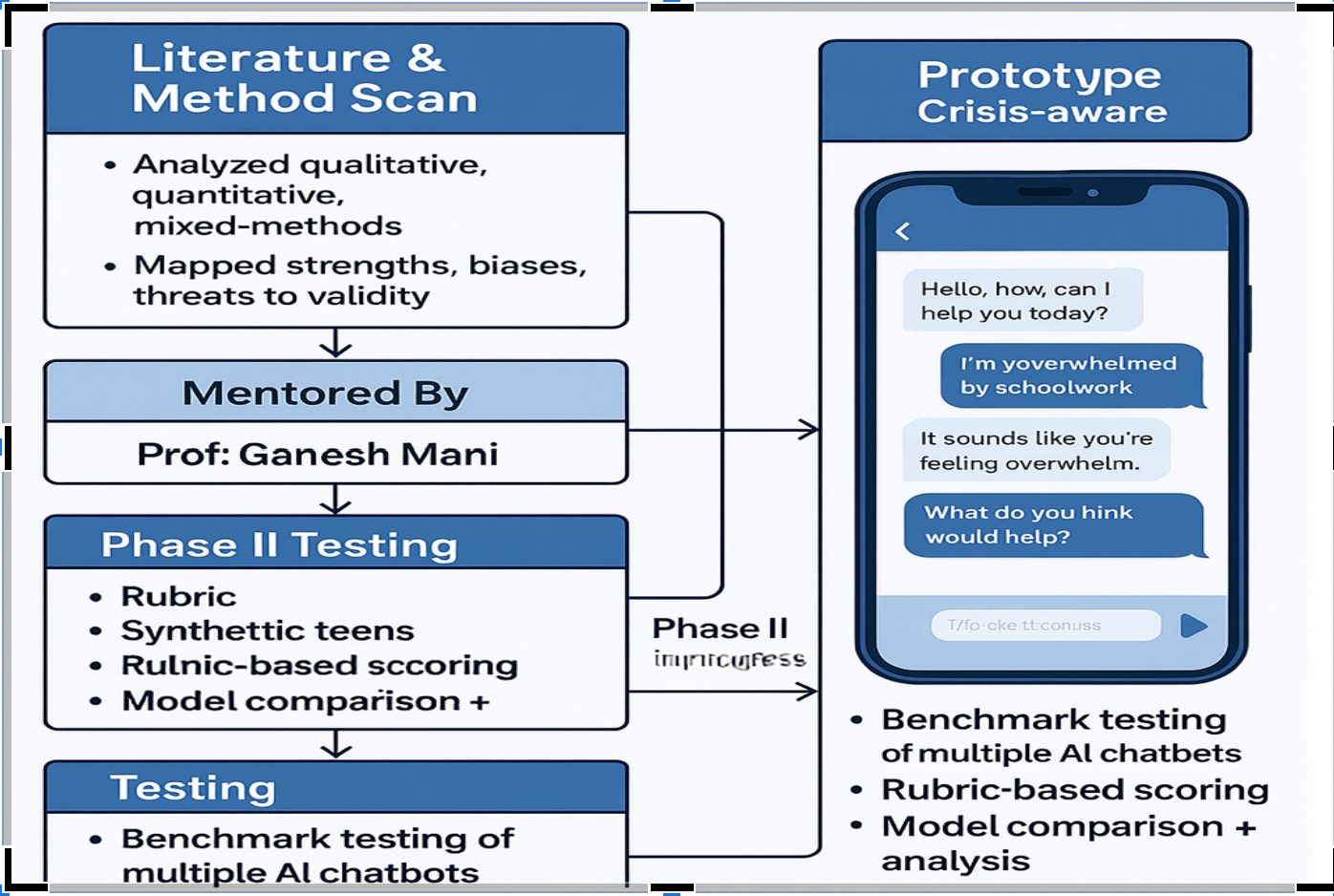

With guidance from distinguished faculty Professor Dr. Ganesh Mani (Carnegie Mellon University), I reviewed and analyzed a recent mixed-methods study comparing human therapists with large-language-model responses to therapeutic communication and used it as a template. I then surveyed widely available AI systems and built a four-step method:

- Create a concise checklist of counseling moves commonly used by school nurses and therapists—validation, open questions, concrete next steps, safety language, and referrals; to better judge the performance of each AI model

- Design synthetic teen/adolescent personas reflecting common prevalent issues in their daily lives (anxiety, family conflict, academic stress, sleep disruption) to avoid human-subjects risk

- Script standardized scenarios and collect full chatbot dialogues

- Score each exchange against the checklist, documenting strengths and failure modes (e.g., minimization, vague advice, missing crisis guidance)

After collecting my data, I will create/analyze a presentation (with data graphs) and scientific report of my research. This will include: my conclusions on my research, ranked AI model performance, and recommended prompts and systems for best usage. The project addresses access and quality gaps by identifying which AI tools most closely approximate supportive first-line communication for youth who cannot easily reach clinicians, advocating for more accessibility and investments in the mental health of youths—while clearly stating limits, emphasizing crisis-referral pathways, and positioning AI as a complement, not a substitute, for professional care. While I am currently working on the first phase of my research, I am hoping to move onto the 2nd phase of my research on the actual data collection, all while focusing on my goal of being able to create an AI prototype chatbox to eventually reach my goal of helping youths and adolescents in having more accessible mental health without them worrying about societal interference and pressure.

PROJECT DETAILS

- Analyzed previous AI research methods across qualitative, quantitative, and mixed-methods designs; mapped strengths, biases, and threats to validity in LLM mental-health studies

- Designed a mixed-methods study (modeled on peer-reviewed therapist vs. LLM work) under guidance from Prof. Ganesh Mani (Carnegie Mellon University)

- Phase I (design/pilots) completed; Phase II (full data collection/analysis) in progress, with conclusions forthcoming

- Built a 4-step evaluation method: clinician-inspired rubric (validation, open Qs, concrete steps, safety/referrals) → synthetic teen personas → standardized scenarios → rubric-based scoring

- Created synthetic teen personas reflecting common stressors among adolescents (academics, family conflict, sleep, social pressure) for standardized testing

- Engineered synthetic adolescent personas to safely simulate high-risk scenarios without human subjects or identifiable data

- Designed IRB-eligible, non-human-subjects personas enabling low-risk evaluation of AI support tools

- Built structured synthetic user profiles with scripted backstories and goals to control for variability in chatbot trials

Currently, testing multiple AI systems (completed full data collection on ChatGPT, DeepSeek, and Gemini) to evaluate comparative performance. The data analysis phase is currently in progress, synthesizing each system’s strengths and weaknesses into a prioritized remediation roadmap.

- Deliverables will include a scientific report and presentation featuring:

- A ranked model leaderboard

- A prompt best-practices playbook

- Data visualizations and comparative graphs

- Deployment guidelines with embedded crisis-escalation pathways

Additionally, engineering a crisis-aware, prototype AI-powered chatbot designed to augment (not replace) clinical mental-health care, incorporating safety guardrails derived from study findings.